AI Thievery and the End of Humanity by Ing

AI technology came seemingly out of nowhere just a hot minute ago, and suddenly it’s everywhere—and it seems like it’s either the end of the world or a dawning utopia depending on who you listen to.

A family member sent me this article the other day, and it got me thinking about how people on both sides of the AI debate are getting it wrong. And also at least a little bit right.

Perspective: My books were used without permission to train AI models. What now? (https://www.deseret.com/opinion/2025/05/03/ai-engines-used-my-books-without-permission/)

As the title suggests, the author is not a fan. And for good reason.

It’s a simple fact that at least some of the organizations that are making and training AI models didn’t ask permission to use any of the material they trained their large language models on. Most of it, they arguably didn’t have to. It was on the internet because it was intended for public consumption.

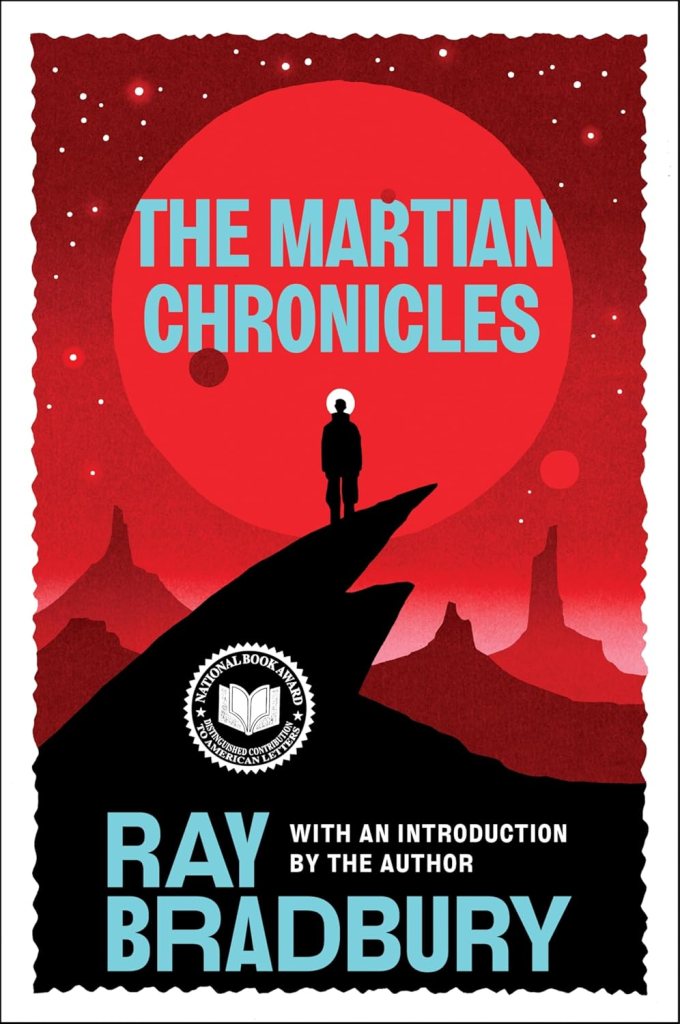

However, some portion of it WASN’T intended for free public consumption. People pirated books written by the author of the article above (along with many others), and the LLMs scraped the contents of entire pirated archives along with everything else. Which means they illegally used vast amounts of material. But because digital materials and copyright are somewhat abstract and the Brobdingnagian scale of the theft boggles the mind, very few people can be made to care.

What should be done?

The organizations that trained AI models on that material need to make it right by paying royalties and/or licensing fees for what they illegally copied. And if they won’t do that voluntarily, they should pay steep penalties on top of it.

How to do it, I don’t know. But the burden should be on them, not their victims. And it should hurt. If it puts some or even all of the companies developing AI out of business, well, tough titties. I don’t think it will, but if it does, fine. They should’ve thought about the consequences before they stole all that stuff.

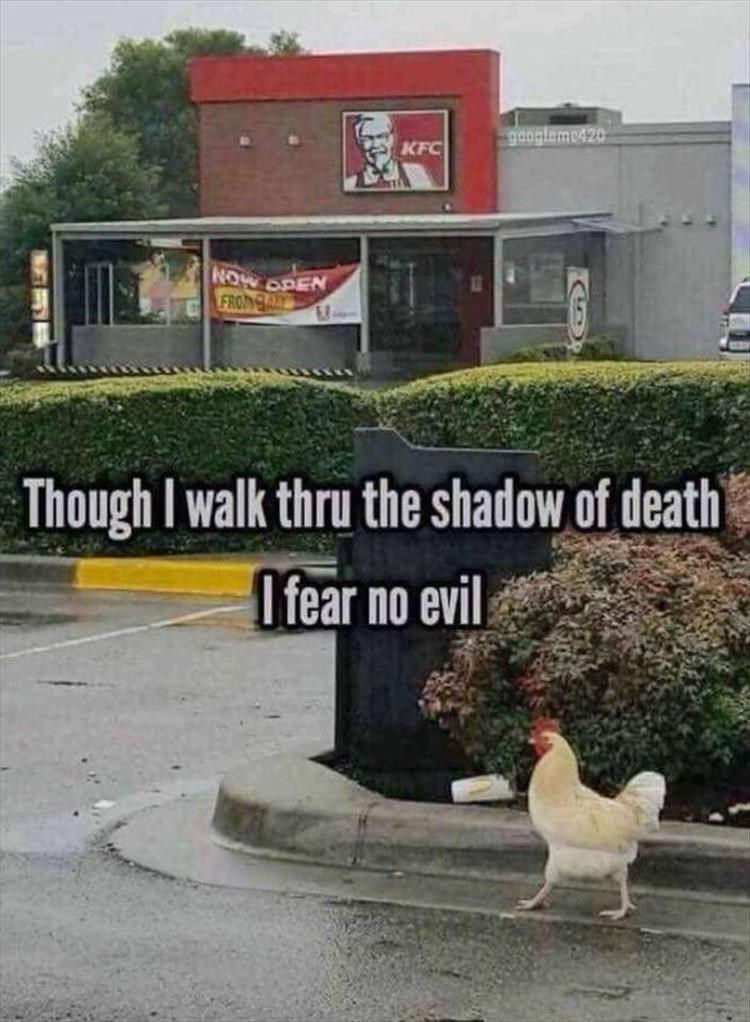

But the people who have been injured here, and AI opponents in general, aren’t helping themselves by framing AI’s emergence as some kind of technopocalypse. They’re throwing around phrases like “making people stupid and making truth irrelevant” and talking about limits and guardrails, but the horse isn’t even on the track. The horse has left the barn, the chickens have flown the coop, and neither protest nor wishful thinking will reverse the event. AI is not going away.

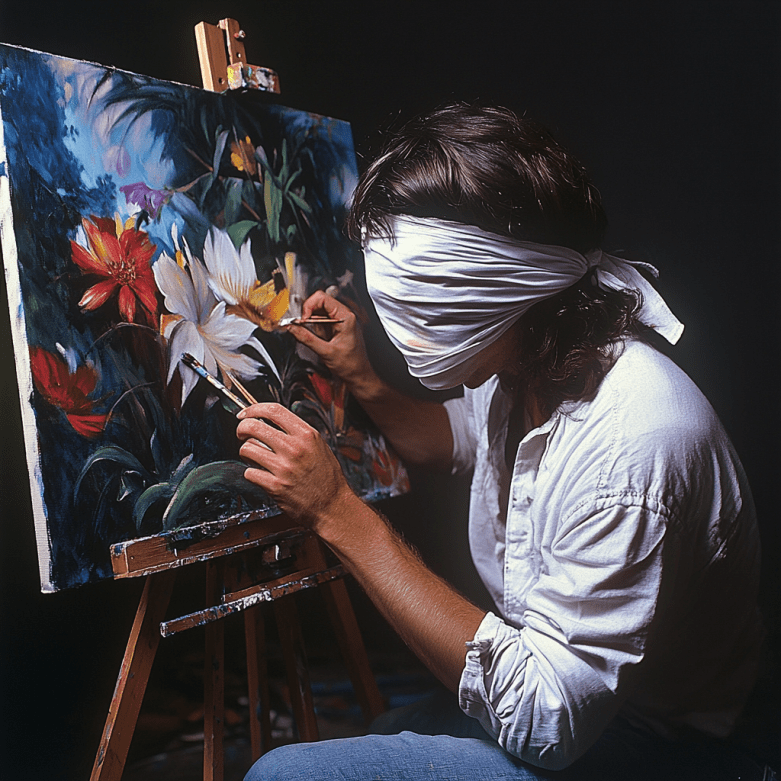

You could bankrupt every company that’s currently working on it, and somebody else would still pick it up and start it up again, because it’s VALUABLE. People can make money off it. Big businesses can make big money. Smaller businesses can make smaller money. All sorts of individuals and organizations all over the place can make their lives easier and do more work with less time and effort. Or, yes, “create” some cool thing despite having zero talent and no desire to develop the craft (see: me, visual art).

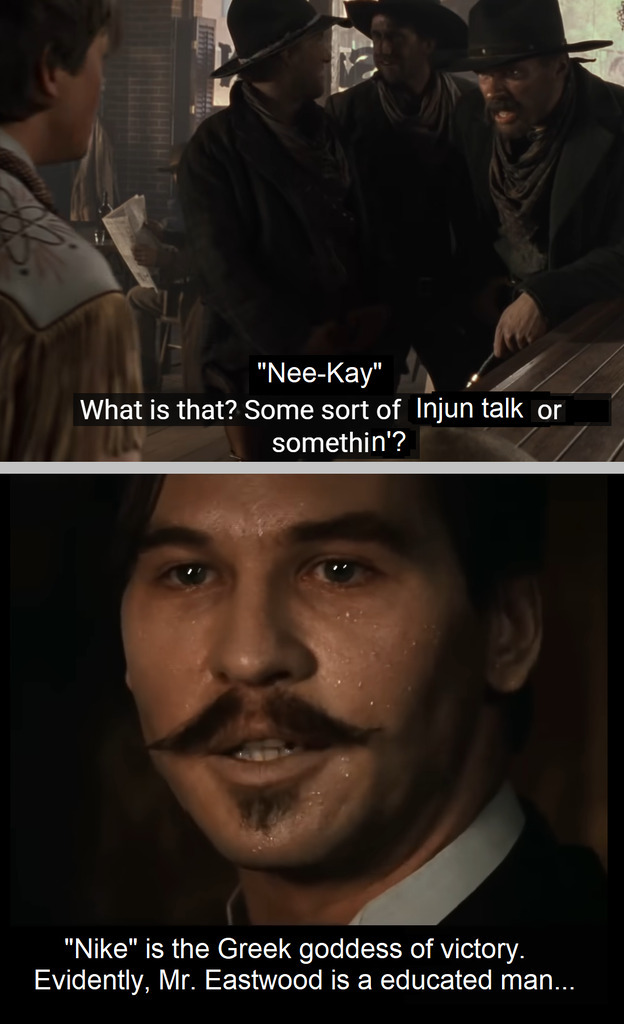

It’s unfortunate that the writer of the article I linked above highlights a legitimate problem, thievery on a grand scale, and then descends into hysteria. (Calling it AI doesn’t help. Artificial it is, intelligent it ain’t. Large language models, or LLMs, are just a mass-marketable form of machine learning, a concept that has been in use for decades.) This new technology isn’t going to destroy culture itself or the human mind any more than the printing press, computers, the internet, or the Industrial Revolution could.

But those things did irreversibly alter our cultures and the way we interact with the world. And this technology is developing with far greater speed.

It reminds me of something broadcast news legend Edward R. Murrow said back in the 1960s, when people were starting to wonder what the recent advent of computers would do to society: “The newest computer can merely compound, at speed, the oldest problem in the relations between human beings, and in the end the communicator will be confronted with the old problem, of what to say and how to say it.”

LLMs (I hate calling them AI) are a heck of a technology, in that they actually CAN, to some extent, remove the old problem of what to say and how to say it…but unless they evolve into something else entirely, they can’t absolve you of the need to consider whether you SHOULD say it. The “oldest problem in the relations between human beings” will remain, and that’s the gap of understanding.

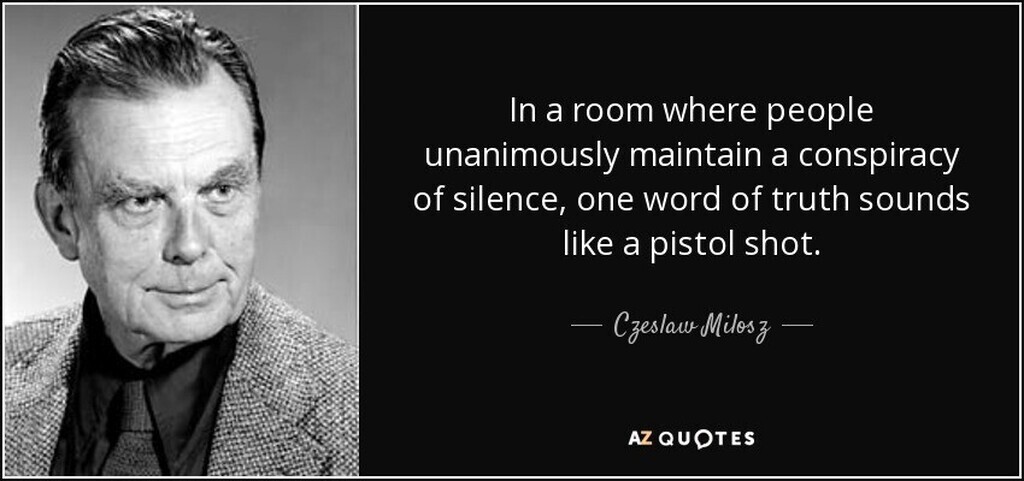

The problems inherent in figuring out what you believe and who and what you can trust are about to be compounded at speed. Again.

So, as our poor wronged author asks above, what now?

Well, we do need to impose consequences for the thievery that occurred during LLM development. That’s a Herculean task by itself, and I have no idea how it ought to be accomplished, but I don’t think fearmongering is going to make it any easier.

And if you’re worried about where this technology is going to take us, so am I.

We should probably all be at least a little bit worried about that. If you’re not uneasy about some of the uses people will try to put it to (especially the people in various governments, with their talk of “guardrails” and “misinformation”), you’re not paying attention. (If you haven’t yet, check out what Marc Andreessen told Ross Douthat after the 2024 election, especially the part about what the Biden junta wanted to do with the AI industry: https://www.nytimes.com/2025/01/17/opinion/marc-andreessen-trump-silicon-valley.html.)

As for the worry about AI taking away people’s jobs, it probably will take some jobs from some people. New technologies tend to do that. The company I work for uses machines to do all the soldering humans once did, almost all of the placement of parts on printed circuit boards, and almost all of the previously tedious, painstaking, and error-prone inspection processes. Yet humans still have jobs in those factories—jobs that are a lot more humane than factory work used to be. New technologies tend to do that, too. (I once was a human doing soldering by hand on a production line, and man, am I ever glad we’ve got robots to do it now.)

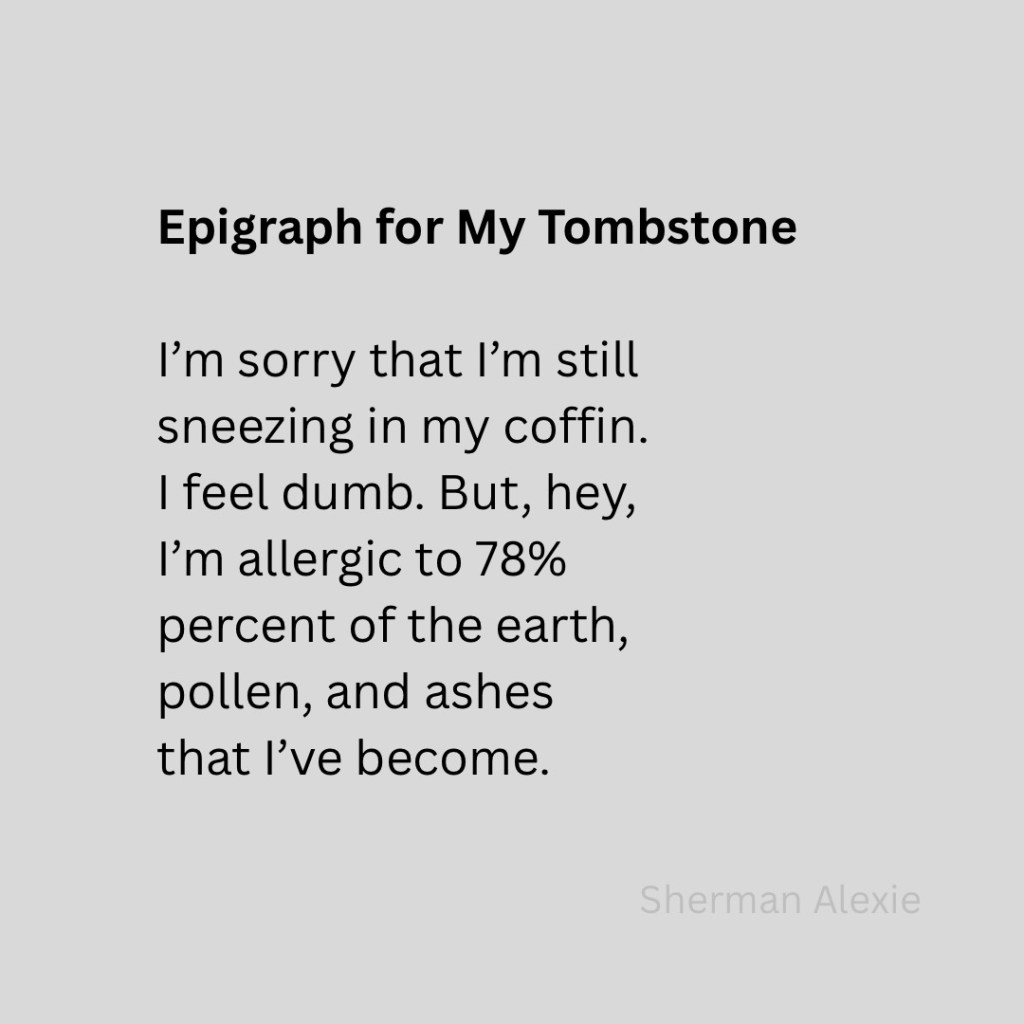

I used to scoff at the possibility of AI taking my job (I played with some early versions of the GPT models behind ChatGPT in 2020, and they were pathetic), but now it looks like there might be a real chance that it could. If not the entire job, at least the part of it I enjoy the most, which is putting words together to convey valuable information to other people.

It can’t yet. But a lot of people want it to. Earlier this year, I got a freelance writing gig from a university I used to work for because they tried to have ChatGPT do a particular writing job last year—and they got slop. Grammatically correct, but slop. They used it, but they also realized the product needed human discernment and creativity, so this year they ponied up the money to pay a human. But what if next year’s budget doesn’t have the money? What if they try AI again and the result is actually good enough?

My day job may be resistant to takeover, as it requires both specific technical knowledge (not very much, but some) and creativity (arguably not a lot, but some). But it’s not out of the question that as AI models improve, they might be capable of synthesizing technical information, a campaign guide, and the appropriate vocabulary well enough to get an effective marketing message across. And there’s 90% of my job, lost to automation. I wouldn’t like it, but I really couldn’t blame anyone for making that decision.

So, again…what now?

For now, I’m approaching this the way I approach most innovations: cautiously. I have less than zero trust in Big Tech (they’ve earned it), so I’m shunning all the AI “enhancements” to my consumer products, which have always worked perfectly well without it. (Are there any that work better with it? I seriously doubt it.) And I’m slowly and somewhat reluctantly experimenting with ways LLMs can save me time or make certain things easier—mostly at work, where there are IT and security professionals who have approved certain apps and where I stand to gain or lose the most monetarily by knowing (or not knowing) what the technology can do. And where, if a certain Big Tech behemoth screws anybody over for its own profit, it’s not me personally.

I might end up crafting prompts and editing a machine’s output for a living, which would make me feel sad, but I guess I could deal. I was a kick-ass copy editor in my day and I still have some skills that a computer can’t develop. Yet.

In conclusion, while the advent of AI and LLMs presents significant ethical and practical challenges, it is crucial to approach these developments with a balanced perspective. The unauthorized use of copyrighted materials for training AI models is a serious issue that demands accountability and restitution. However, framing AI as an existential threat to humanity may hinder constructive dialogue and solutions. Instead, we should focus on implementing robust regulations and ethical standards to ensure fair use and protect intellectual property. As AI continues to evolve, it is essential to remain vigilant and adaptive, leveraging its benefits while mitigating its risks. Ultimately, the future of AI will depend on our collective ability to navigate its complexities with wisdom and foresight.

(Final note and disclosure: I fed the rest of this essay to Copilot and told it to write a conclusion for me. Haha! Never let it be said that I can’t adapt! Also, “implementing robust regulations”? Pshaw! I hope you realized that wasn’t me talking. It just now occurred to me that I might’ve got better results by telling Copilot to emulate my writing style (could it? I dunno), but maybe it’s best that I didn’t. Don’t want to give it any ideas about usurping my role as the thinker in this relationship.)

*This is Sarah. Unfortunately my work too was pirated to train LLMs. Am I on the warpath about it? No. Am I pissed? Yes. Because the LLMs belonged to small, scrappy companies like Meta and such. So, why am I not on the warpath? Well, because humans get my books to train new writers all the time. So they owe me the price… of a copy. I’m pissed because it’s paltry of them to bilk me of $4.99. But it’s not like it would change my life. Eh. – SAH.*