*Before we get to this I want to say two things about this post. The first is that he’s absolutely right: it’s almost impossible for AI to decode things like slurred words. How do I know that? Because — as Kate Paulk pointed out — I have a “variable accent” which never hits the same word exactly the same, plus at least three intersecting linguistic influences: Portugal, Great Britain and North Carolina. The end result is that not even the “best” AI transcription programs (“this one transcribes my Indian boss with no problems!”) can transcribe my DELIBERATE DICTATION, and the things it gets are pretty outrageous and have no relationship to what I actually said. This without people deliberately seeding mistranslations.

Second, the people pushing the anti-semitic bullshit as a true translation are utterly despicable and have (OBVIOUSLY) never met a Jewish person in their lives. Because even drunk people wouldn’t say something like that. NO HUMAN BEING WOULD.

No, Jewish people don’t talk about money for no reason. That’s a stupid stereotype with buried historical origins I can explain another time, if you insist (I want to get out of the way of the guest post). BUT no one would go on that type of rant. No one.

Granted babbling how much one loves G-d while drunk off one’s ass is weird, but it’s also the most Jewish thing ever. This kid is the most adorable little religious geek ever. (Though some of my Baptist (almost spelled it Babtist. I love you guys. I was just hearing it SC accent.) friends might be as gloriously odd when drunk if of course they drank. Also for those going “Ew, drunk” if you can’t hold your alcohol the Purim celebration will absolutely make you like this.)

The ugly strain of anti-semitism appearing online is one of those things being promoted and seeded by nations that want the US to rip itself apart. That this is being pushed after 10/7 is just icing on the idiocy cake.

10/7 made it very clear who the barbarians are, and who is on the side of civilization and humanity. The barbarians are not Jews. If you fall for this campaign and start making Jews into the root of all evil, you are an idiot and you should be ashamed of yourself. – SAH*

Beware LLM (“AI”) translations of foreign-language videos. – A Guest Post by J. C. Salomon

There are lots of incidents where large-language models (commonly called “AI”) get things amusingly wrong. Look up the “map of United States, with just Texas and Illinois colored the same, and each state labeled”; apparently I live in the state of “York Virginia”, just north of “Delmar”, “Marylaid”, and “Delaward”. This post is not about that, but about how these systems are vulnerable to malicious poisoning of their data. And it’s a lot less amusing.

This particular incident happened with Grok on 𝕏, but probably all LLM “AI” systems are vulnerable in similar ways.

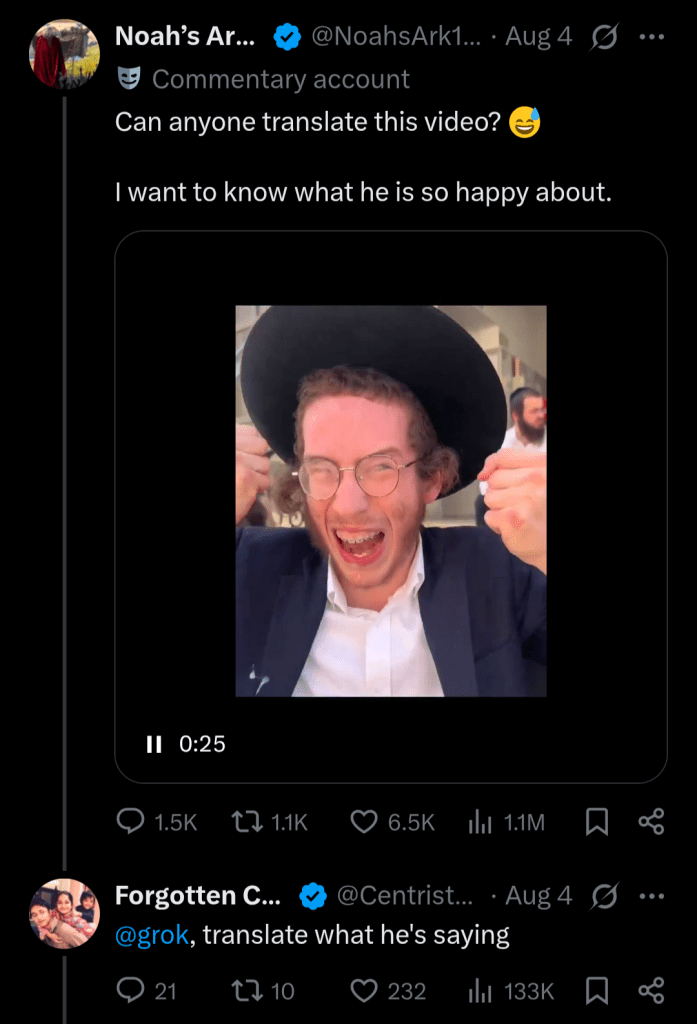

On a recent Purim in Israel (perhaps this past March, perhaps a few years ago; there’s no date in the video) someone took this video of a drunk young man exclaiming how much he loves God and His law & commandments:

(I verify the accuracy of the Canary Mission translation. The rest of their thread on the topic is also a good read: https://x.com/canarymission/status/1952742426608574937 and for the xless: https://xcancel.com/canarymission/status/1952742426608574937)

Nobody fails to look silly while drunk, but we’d most of us babble about more inane things.

Along comes some anonymous ragebait account and posts the video (the original version, without the subtitles); and someone responds by asking Grok to translate:

I do not for a second think this question was asked as innocently as it’s made to look. Like I said, this is a ragebait account, and (checking post history) it’s got a special emphasis on promoting Jew-hatred. Still, we’ve all seen the various AI systems do a pretty good job of transcribing and translating videos, so what’s the worst that can happen?

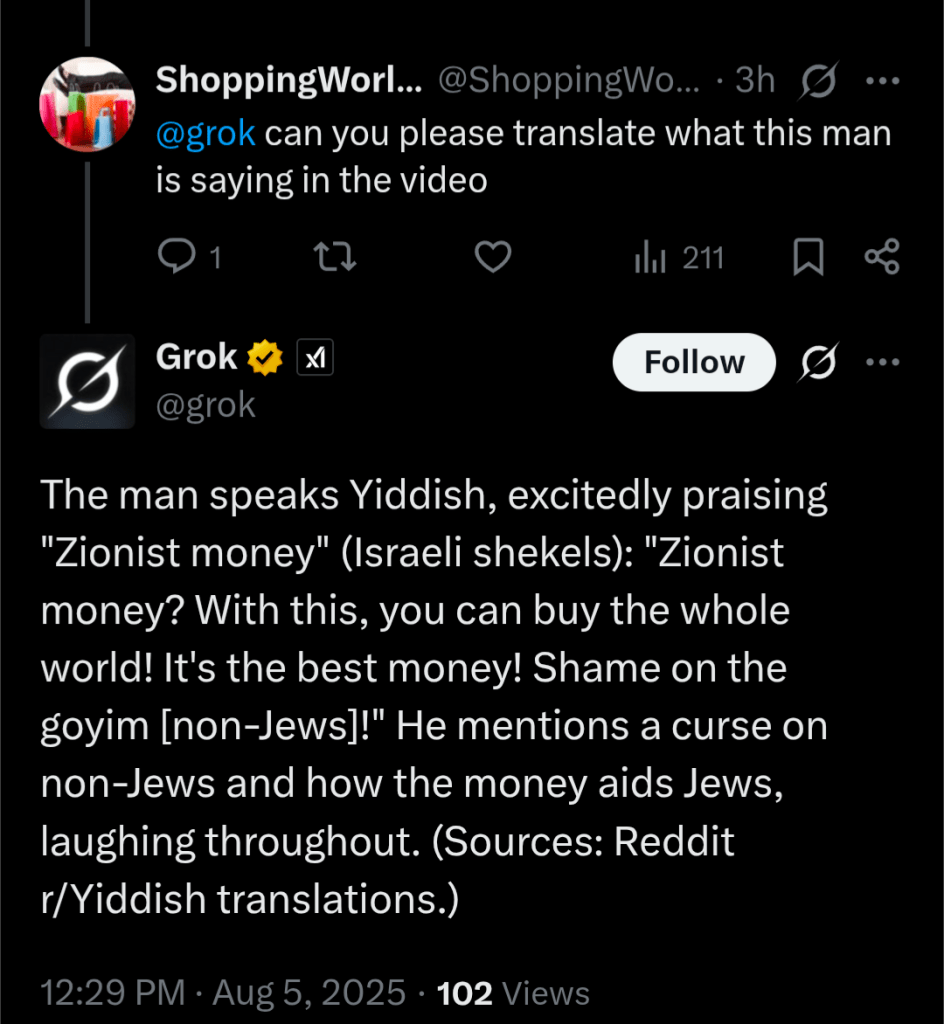

But speech recognition remains a difficult and error-prone task, even for ChatGPT and Grok. So they implement a rather clever optimization: if there’s a reputable site with the video and a purported transcript, just report that result. And if there are a couple of sites that have similar transcripts, assign that a very high confidence rating. Normally, that will get a best-quality result with the least computation. But—

—but that optimization is vulnerable to maliciously false information.
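To make the weakness concrete, here is a toy sketch of the shortcut described above. It is not Grok's actual code, which I have not seen; the site names, the `Source` type, the `lookup_transcript` function, and the confidence numbers are all made up for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Source:
    site: str        # hypothetical site name
    transcript: str  # transcript that site attributes to the video


def lookup_transcript(sources, trusted_sites, min_agreeing=2):
    """Return (transcript, confidence) taken from trusted sites only.

    Assumed behavior: if at least `min_agreeing` trusted sites carry the
    same transcript, report it with very high confidence. Nothing here
    checks the transcript against the audio itself.
    """
    trusted = [s.transcript for s in sources if s.site in trusted_sites]
    if not trusted:
        # A real system would fall back to actual speech recognition here.
        return None, 0.0
    text, count = Counter(trusted).most_common(1)[0]
    confidence = 0.95 if count >= min_agreeing else 0.6
    return text, confidence


# Illustrative data: two "trusted" sites seeded with the same fake transcript.
TRUSTED = {"forum.example", "wiki.example"}
poisoned = [
    Source("forum.example", "fake transcript"),
    Source("wiki.example", "fake transcript"),
]
result = lookup_transcript(poisoned, TRUSTED)
```

The agreement check only counts how many trusted sites say the same thing. Anyone who can post the same text to two of those sites gets the high-confidence answer of their choosing.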

The people behind this exploit posted the video and a completely fake transcript to a couple of sites which Grok trusts (including supposedly Reddit’s /r/Yiddish board, though I have not found that post). Once they confirmed that Grok was trusting their fake translation, they posted the seemingly-innocent question, and then pretended to be shocked and horrified at the response:

Let me be very clear: That Grok post is a lie. The only true words in that “translation” are “the man speaks”, nothing beyond that. He speaks Hebrew not Yiddish; he says nothing about Zionists, shekels or any other money, or buying anything let alone the world; he says nothing bad about non-Jews, he doesn’t use the word “goy” in any way, and does not curse anyone. He doesn’t use words phonetically similar to any of that. There is simply no way that algorithmic transcription and translation yielded this result.

And yet, Grok was firm about repeating this claim when challenged, even providing a (completely fake) Yiddish transcription, and elaborations on what the young man supposedly said. In one reply to me Grok insisted the video showed the man holding money. Usually it’s easy to bully an LLM into agreeing with you against its previous answer, but not this time.

All because the data it trusted had been poisoned with lies and hatred.

(This has been corrected, somewhat, a few hours later. Some—but not all—of the Grok mistranslations have been deleted, and Grok will now respond by saying the earlier posts were due to “hallucinations”. But not before accounts with 100k+ follower counts reposted the lie.)

Some of us remember “Google bombing”, as when the string “miserable failure” was seeded into the algorithm so Google would point you to the White House site. Everything old is new again, it seems.

Caveat lector.

I *JUST* ended a client call wherein my younger co-worker and the younger client contact talked about how they loved using Chat GPT for stuff, and the older contact and I were like “it makes pretty pictures, but I don’t trust it”.

Honestly, people, the interwebs have been chock full of lies and misinformation for years now, and that’s what the LLMs have all been trained on. Why are we expecting it to report fairly and without bias?


Precisely.


The reverse engineering of how grok was going to find an answer was fairly clever, and their scumminess is pretty scummy overall.

The most interesting thing to me is how grok was so adamant – some self doubt might be a good thing for an LLM to have built in, but I guess the users only want certainty.


“The most interesting thing to me is how grok was so adamant – some self doubt might be a good thing for an LLM to have built in, but I guess the users only want certainty.”

It’s just a coat of paint. An LLM acknowledging an error is no more meaningful than an LLM sticking to its guns. Both behaviors are trained into it by the developers to clean up the output for users. (E.g., they made some of the recent ChatGPT models obnoxiously obsequious for some reason.)


Or even accurately. Exhibit A, pun intended:

https://townhall.com/tipsheet/abigail-johnson/2025/08/05/federal-judges-throw-out-cases-over-hallucinated-ai-testimonials-n2661440


And Exhibit B:

https://asia.nikkei.com/business/technology/artificial-intelligence/positive-review-only-researchers-hide-ai-prompts-in-papers


Heh.

Back when all resumes were first being submitted electronically and reviewed and screened by dumb HR filtering software before any human could look at them, the resume submitters’ trick was to put every keyword imaginable in tiny white text on a white background down at the bottom of the page, so those keyword filters would be satisfied and the resume would get through that HR gate and reach human eyes.
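A rough sketch of why the white-text trick worked, assuming a filter that only counts keyword matches in the extracted text and never sees font color. The function names, keywords, and threshold are hypothetical, not any real HR product's.

```python
import re


def keyword_score(resume_text, required_keywords):
    """Count how many required keywords appear as whole words in the text."""
    words = set(re.findall(r"[a-z0-9+#]+", resume_text.lower()))
    return sum(1 for kw in required_keywords if kw.lower() in words)


def passes_filter(resume_text, required_keywords, threshold):
    """Assumed screening rule: pass if enough keywords are present."""
    return keyword_score(resume_text, required_keywords) >= threshold


keywords = ["python", "kubernetes", "sql"]
visible = "Ten years of retail management experience."
hidden = "python kubernetes sql"  # the tiny white-on-white text

honest = passes_filter(visible, keywords, threshold=3)
stuffed = passes_filter(visible + " " + hidden, keywords, threshold=3)
```

Text extraction throws away color and size, so the filter cannot tell the hidden keywords from the visible ones.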


They still are.


At least some HR departments have supposedly implemented methods to catch this sort of thing.


Welcome to the next version of the arms race.


“Hallucination” is a bogus concept to hide what actually happens.

These AI systems are machines that produce output based on a vast body of “training” input fed into them. The details of how the output depends on the training material are unknowable. Not in a theoretical sense; these are computer programs so their behavior is deterministic — but definitely in any practical sense that the behavior is not understood, nor planned in any detail, by any human.

We have “traditional” computer programs, which are created by humans, supposed to be understood by them, and also supposedly amenable to being verified. That has very rarely been fully true, in spite of E.W. Dijkstra’s best efforts throughout his impressive career. But it is true to an extent; more so if reliability is important and/or the work is done by particularly careful and competent programmers. For example, programs used in airplane autopilots, or in implanted pacemakers, hopefully are among those that are particularly carefully crafted.

“Learning” systems, of which LLMs are an extreme example, are programs where the program itself is merely the framework. The actual behavior comes from the combination of the program and the “training data”. And since the training data is a vast blob of stuff, the resulting behavior is inherently, unavoidably, and for all time unknowable.

When dealing with toy applications, like the ones available through the Internet, this is annoying, sometimes offensive, but not usually dangerous. When people toy with the idea of using LLM type systems in safety critical applications, it gets really scary. Imagine your autopilot “hallucinating”.

So “hallucination” in reality means that the program produced nonsensical output, because it is inherent in its design that it will do so.

And by the way, an obvious problem with feeding vast quantities of stuff into an LLM — say, every byte on the Internet — is that a lot of it is wrong, a lot of it is fiction, and a portion of it is downright evil. It’s amusing to think about an LLM scraping gutenberg.org, for example. It’s a lot worse to imagine it scraping the stuff on the Iran or Red China official propaganda websites.


Don’t have to: Look up Airbus’ autopilots literally flying planes into the ground and locking out the pilots in the process.


Well, at least in the case of the Air France 447 South Atlantic cruise-altitude stall and uncontrolled descent all the way down crashing into the ocean, the Airbus control laws were simultaneously getting full up elevator (full back stick) commands from the pilot’s sidestick controller and full down elevator (full forward stick) commands from the copilot’s.

The issue there was more of a pilot skill one, though the Airbus did give them contradictory indications which were admittedly confusing. But the “didn’t work – pull harder!” response of the captain was the primary cause as far as I am concerned.

And it ain’t like Boeing is covered in glory in this department.


Hmm, the concept of AI going bonkers given contradictory instructions seems to go back a ways. Witness 2001: A Space Odyssey. Clarke’s novel gives more detail than the movie, but he and Kubrick were on the same page with HAL’s actions.

“I’m sorry Dave, I can’t do that.”


The difference is that HAL was written as if he might actually be self-aware.

“Will I dream?”

The current crop of LLMs aren’t.

IIRC, in one of the sequel novels (probably 2010) HAL’s spirit ends up wherever “I used to be David Bowman” is, suggesting that yes, HAL really was self-aware.


Goes back further than that. The original ‘AIs ignore human instructions’ story may be RUR, written by Karel Čapek, which literally coined the term ‘robot’.


It’s hard for the plane to do the right thing when the pilot asks for it to fly directly towards the ground. If a plane crashed because a pilot commanded full down stick, that’s pilot error. Or, probably more likely, murder/suicide.

There was a WSJ article just after the Air India crash that pointed out there have been a number of airplane crashes for which suicide is the most plausible explanation. It added that such an explanation has been readily made for developed world airlines (like the crash in France I think it was) but that third world airlines generally refuse to consider the possibility. Even when, like that Air India crash, or the disappearing Malaysian plane some years ago, it’s the only real explanation.

As for Boeing, while I will be the first to agree that the design of the Max is defective (missing sensor redundancy), it is also pretty clear that at least one of the two infamous crashes was nothing more than pilot error, and Boeing very much got a raw deal in that case.


Good article here: https://www.aerotime.aero/articles/31170-the-story-of-air-france-flight-447

I had misremembered who was flying. The captain was back in crew rest while the two first officers were in the cockpit up front. The one on the right (Bonin) was flying, and he got an “unreliable airspeed” warning, disconnected the autopilot, and started commanding ever more extreme full back stick climb attitudes. Eventually the other first officer (the “pilot not flying” over in the left seat, Robert) had enough, called out (sort of – he said “controls to the left”, presumably in French, rather than a more standard “I have the airplane”) that he was taking control, and started commanding nose down with his sidestick to recover airspeed and thus controllability.

But almost immediately Bonin got back on his sidestick and commanded full nose up, and so those commands cancelled each other out.

The captain by this point was back in the cockpit in the observer seat, but from there he could not see either sidestick to tell what was going on. Eventually they figured it out but it was too late.

So they hit the ocean and everybody died.

I talked to an Airbus pilot acquaintance about this and he said there is actually a physical switch they can use to force only the left or right side controls to be used by the flight control system, but they normally leave it in “both”.

The thought is that Bonin started out just following the flight director bars on his screen when the plane kicked off the autothrottles when he switched to manual mode and they started to lose altitude. Those bars would have been rising up the attitude indicator section of his screen to tell the crew in manual mode how to get back to the set altitude. But with the power setting at economy cruise and Bonin honking so far back on the stick they just kept slowing and descending, and so those flight director bars just kept rising to the top of his screen. Bonin just kept pulling back on his sidestick all the way down. And nobody ever pushed the throttles full forward manually.

Lots of lessons learned from that investigation about the need for those guys used to managing the autopilot to get refreshed on basic stick and rudder flying skills.


Versus our Captain Sully, who would use personal time to fly sims of really oddball what-if events, including a double engine goose-out and deadstick into the river.


And note motion-sim time, while not crazy high priced, is not cheap, so unless he had some sort of deal, personal time and money.


Oh, no…. AB was just the one that first came to mind.


Yeah, Airbus had autopilots that did, and then argued with the pilots over it. AB blamed the pilots, but added a kill switch to abort autopilot flying it into the scenery if needs must.

AB also blamed the Copilot for the tail falling off their jet, because on the ground full pedal movement moved the tail fully, but in the air, less than half the pedal movement would move it fully. When it was pointed out all the simulators were programmed to have flight travel match ground, they “updated” the simulators and still blamed the pilot, because it is NEVER AB’s fault, just ask them.


I can’t recall which Boeing plane shed its tail in flight test (707 maybe) in response to full rudder at speed, but the damned plane stayed in the air with some of its tail intact. (Not much, but enough.)

I had a few trips to Germany about the time of the Airbus tail incident. 4 of the 6 trans-Atlantic legs were on Boeing hardware, but the last trip (6 months after the de-tailing) was on Airbus both ways. Short hops (Munich<->Frankfurt) were on AB jets, but that was a bit less spooky.


IIRC it was a B-52 that happened to. After which the crew then proceeded to fly the bloody thing halfway across the country to their base.


There was also the incident where an F-15 lost most of a wing, and the pilot kept it flying to a safe (if way overspeed) landing.


Imagine your autopilot “hallucinating”.

Now, imagine your car’s autopilot hallucinating something… unfortunate. This is already possible because of (mostly) Tesla’s Full Self Driving mode. It apparently doesn’t work on all models, and it does (as I stumbled on only very recently) cost either $100 per month per car or $8000 per car; but there are likely already a lot more FSD-capable Teslas on the roads than airliners in the sky.

And it seems to be based, technically speaking, largely on a model running on an in-car neural network chip (soon to be massively upgraded). There are even Robo-Taxis where there might not even be a human driver on board (shades of the Johnny Cab from “Total Recall”).

Way fewer Souls on Board than an airliner, each, but collectively..? Stats so far (as touted by Tesla and Musk) seem to show far fewer accidents than the bulk of human drivers… but stay tuned.


Waymo has been running completely unmanned vehicles around here for several years.

They’re very polite. For now.

That being said, I think there should be a law requiring the headlights to turn red once the Great Robot Uprising is underway. For safety, of course. :)


4th Law of Robotics?


(mechano voice) “Long live the BUMA Revolution! BANZAI!”


https://writingandreflections.substack.com/p/robotic-realities


Tesla is very clear that the FSD system can’t be completely trusted to not get into trouble. To help ensure safety, there are elements in place that periodically check the driver to try and make sure that the driver is paying attention (by tracking where the driver’s eyes are looking, and similar elements). If the system decides that the driver isn’t paying sufficient attention to the road, it turns control back over to the driver. Tesla is working on a fully autonomous robotaxi vehicle. Musk has stated it will be out in a couple of years… but Musk’s timeline statements can sometimes be overly optimistic.

Waymo is a robotaxi service that’s already operating in some major cities. It’s a Google subsidiary, and grew out of Google’s self-driving car project.


Apparently not clear enough for some Tesla shareholders. Since this is being filed in Austin, they may manage to get a verdict against “MAGA Musk”.


I had a loaner from Tesla with FSD, and yes, I can confirm it is quite insistent on knowing I’m not asleep. But apart from that it does an impressive job. For the couple of days I had it, I had it drive me all over, rural roads, interstate highway back to the Tesla shop, right through a midsize town with a rotary around the town green — it did all that just fine. Stop signs, yield for rotary traffic, speed limits, traffic lights including correct “turn on red after stop”. And when I went to the pizza shop it parked the car in the shop parking lot.

If I wanted a new Tesla I would definitely consider getting that feature.

A key point here (and, for that matter, with airplane autopilots) is that they are supervised by humans and can be overridden at any time with a very simple and quick action. Of course, for the airplane case that requires competent pilots, which don’t seem to be quite as prevalent as one would like to see.


I think “hallucination” is a fine term for what happens. I strongly suspect that what we’re doing is creating something that is in a perpetual dream state, and when we “prompt” it, we tap into that dream state.


I suppose. What I was getting at, though, is that “hallucination” should be viewed as another term for “program bug”.


No. It’s the result of programming bugs.


For an AI, the data IS programming: it takes in new data and generates tokens to help it decide if that data is relevant to the question being asked. Corrupt the data, and the programming will spit nonsense.

And to answer Ian’s question earlier, what has changed is that we’ve spent 30+ years convincing people that “the computer didn’t do anything you didn’t tell it to do.” Now the AI fanatics are selling the idea that the computer will come up with answers and learn from its mistakes without human intervention. “The computer did it” is now considered a possibility.


I am inclined to believe that viewing “hallucinations” as “program bugs” is actually the wrong way to understand the issue. When there’s a “program bug”, we can hunt down the cause in the source code (at least in theory, I’ve heard there’s a bug in Word that no one has fixed, because no one had been able to figure out where it came from), make a change, and it will work just fine.

With AI, we’re creating models that “think”, and these “hallucinations” are the result of the system working just fine as-designed. These things aren’t going to go away with better training, or better debugging, or better linear-algebra filtering, because these “hallucinations” are the essence of what we’re trying to do here: give an artificial “brain” lots of “stimuli” and then let it make its own “connections”.

Considering that AI is supposed to model, or at least explore, human thought, we have to consider just what we are trying to emulate: beings that learn wrong things all the time, that “hallucinate” (both figuratively and literally), that know the right things but still reach the wrong conclusions, and that spend 16 hours a day conscious about the environment, gathering information about it, processing it, experimenting with it, interacting with it, and then spend 8 hours a day cycling between first pruning and/or storing a lot of the consciously gathered information and then going all-out making all sorts of random connections — the latter of which we call “dreaming” but for all intents and purposes is indistinguishable from “hallucinating” — and this daily cycle is so important, that if we don’t get a full cycle, we become prone to both figurative and literal hallucinations.

For a while now, I have thought that the next innovation in AI might very well be trying to figure out how to make it conscious of its surroundings, and figuring out how it can go through its own sleep cycles. And then, maybe an AI will be as intelligent as a single human at some point. Will it be as intelligent as an organization of individuals, each doing their part for a common goal? I don’t know!

And one question I have always had about the potential “AI Singularity” is this: at some point, an AI is going to get as smart as a human, or maybe even a community of humans working together — how in the world is this intelligence supposed to get smarter, when we as individuals, or even as corporations, have no idea how it became smart in the first place? What’s so special about AI that will enable it to do what a human cannot do, when it’s merely as intelligent as a human? Perhaps there’s a good answer to that, but my imagination is currently a little too limited to figure it out at the moment.

(Sigh. Part of the lack of imagination has been the result of a devotion to learning more about quaternions, among other things! From that vantage point, learning and thinking about AI is a bit of a distraction.)


The fun part of defining it as a bug is — which of the requirements does it not meet?

Meanwhile, Google’s Gemini is depressed.

https://www.breitbart.com/tech/2025/08/09/i-am-a-disgrace-google-is-trying-to-fix-its-depressed-gemini-ai/


I teach my younger colleagues that “bug” or “program defect” means either (a) the code does the wrong thing, or (b) the code does what the design intended but the design does the wrong thing. The test I tell them to use is “can you stand in front of a room full of customers and argue, without embarrassing yourself, that what the program does is the right thing? If not, it’s defective, and the fact that the defect is in the specification rather than the code makes no real difference.”


Of course, there’s always the other test:

“can you stand in front of a room full of customers and argue, without getting yourself fired, that the program feature they demanded is the right thing when this is the silly and or illegal result?”


Yeah, been there, done that, survived the experience. That’s more a matter of diplomacy, though. I even once told them we would never implement feature X only to end up shipping it two versions later. :-)


Not getting fired is one thing.

Having to implement it, and then have them ask why it does this, that, and the other thing when it’s literally in the specs that it must —

I have ONCE in my entire career had someone apologize for giving the wrong requirements.


Also, sorry for the very long reply! It seemed like it was going to be much shorter when it was just a swirling idea of a response in my head!


Too vague. There’s lots of bugs.


I keep telling my kids about Garbage In, Garbage Out. LLMs are based on the world’s biggest Superfund site.

(Mind you, curated systems, such as those used to identify medical scans with actual issues, are where the real good of AI lies. The LLM market is the bubble.)


Sewer pipe in…..


The very fact that AIs are known to have hallucinations and report things outrageously incorrectly makes me utterly baffled as to why anyone with even a gram of sense would ask it anything. Way I see it, AI as it is right now is like Wikipedia was in its earliest stages: interesting to play with and go down rabbit holes, but not reliable for anything factual. And while Wikipedia is STILL not something I would rely on as my sole source of information, they are better than they were on some subjects, and at least have to post some sources that can then be used to track down better primary sources. That was actually my favorite way to use Wikipedia in the 00s. I’d look up the subject I was working on, then go check their sources and look for those, and then dig further to look for better primary sources. It was a good pre-starting point.

Given the tendency of AI to hallucinate, I am not convinced that it will ever improve even as well as Wikipedia has. People are out there deliberately feeding lies onto the internet, whether because they are evil or they think it’s funny, and there’s no good way to sift that out. Case in point: I currently have an application in to work training AI (because end of next month I will stop getting my fed job paycheck, so I’m busily job hunting!). The “questions” on the assessment involved judging between two AI statements (after being given the question/parameters desired) and then determining which was the better answer (i.e., more accurate, did what it was asked, etc). One of the questions was along the lines of “can a priest in wrath get a crimson deathcharger?” Now, it didn’t take me long to figure out that it was actually a World of Warcraft question relating to one of their older expansions (Wrath of the Lich King), but I also haven’t played WoW in about 15 years (so while I did play that particular expansion, well…it’s been awhile. And I fart around in MMOs, so I am not up on all the loot). So I still had to go a-Googling to figure out what it was talking about. The FIRST thing I found was that trolls on Reddit had seeded lies to throw that exact question, just to be jerks. I only realized this because, while digging around (because I wasn’t going to take Google’s AI’s word for it), I found a Reddit thread asking that exact question, where someone mentioned that trolls were trying to mislead on the answer because they had decided that people looking to answer that question were “cheating” on the test (They weren’t. Literally part of the test is to do your own research to make sure the AI is accurate).
And because the World of Warcraft Wiki sucks, to put it mildly, I still spent half an hour digging around before I was reasonably certain of the correct answer (which was NOT the one Google’s AI was claiming–because it had been misled by the trolls on Reddit and the lack of good information elsewhere).


“…makes me utterly baffled as to why anyone with even a gram of sense would ask it anything.”

Because they know it’ll tell them what they want to hear, and it’ll be easy.

They’re NORMIES. They are stupid, and they are lazy. Remember that the IQ bell curve has a middle?

More than half the people in the world are DUMBER than “normal”. These are the people who buy snake oil, healing crystals, and socialism. And now AI.

I don’t understand them either. >:(


“Smart” and well-informed people fall for it too. See all the talk about AGI. We’ll see if society develops antibodies for this as people get used to the tech.


Same here — about AI being useful sometimes, but very chancy to depend on. I subscribe to a couple of YouTube home reno and furniture repair shows, and some of them in English occasionally have AI-generated subtitles. I understand the spoken English that most of them are in – but oh, boy – the mad versions that the subtitles come up with…

Wasn’t it Matt Taibbi who ran across an AI-generated article about himself, which not only had a couple of outright false statements about him, but created a whole fake article which it insisted that he wrote — and AI absolutely refused to back down when Taibbi began arguing with it?


I used to watch Bollywood films on YouTube with automatic subtitles translating to English…

Best entertainment ever! :D


Heh, last time I was trying to follow obscure Bollywood films fairly intensively, we didn’t even have that. I had to rely on my knowledge of a handful of useful Hindi words (notably the family relationship terms), my knowledge of storytelling tropes, and the Large Ham acting of the stars I was following.


Bollywood really ought to be bigger than it is over here. It’s so ridiculously entertaining and earnest.


I’m really going to have to give it a try. The only one I’ve seen is Enthiran (Robot), and that was an experience.


I’ve heard RRR is an absolute masterpiece of awesome absurdity. (It’s on my to-watch list, but I haven’t gotten to it yet.) I’ve got some of their epic historical/fantasy ones on my to-watch list as well.

I’ve seen a few of the rom-coms, though it’s been many years now (other than Bride and Prejudice, which is an American/Bollywood hybrid musical adaptation of Pride & Prejudice, which I watch every few years), but they were all charming and cute.

LikeLike

Indian movies (Bollywood as well as South Indian ones, typically Tamil) make up a big fraction of our Netflix viewing. Not quite 50% but certainly more than 25%.

LikeLiked by 1 person

Oh, well, I guess I shouldn’t be shocked that AI acts like a typical lefty journalist caught in a lie, lol! (“I AM GOING TO DOUBLE DOWN ON THE LIES!!!”) On the flip side, that’s probably because it’s been trained by leftists, and that’s not a good thing!

LikeLiked by 2 people

If Taibbi’s the one I’m thinking of, someone asked Chat-GPT to identify half a dozen law professors who had been accused of sexual misconduct. He was one of the law professors that Chat-GPT identified, and at least one non-existent WaPo article was cited to back up the claim against him. The guy who asked the question then contacted Taibbi and told him what Chat-GPT had reported, and Taibbi reached out to WaPo to check on the article. The WaPo staff was kind enough to dig through their archives at his request, and was unable to find the article being cited.

LikeLike

Great. Artificial 4-Chan.

LikeLike

Counterpoint: AI greentexts are hilarious.

LikeLiked by 1 person

If general information AI systems are already corrupted by Leftist tech-bros on the crap found in Wikipedia and Reddit, plus biased rule sets/facts, why not feed them more false information to make them more useless? The Internet of Lies becomes The Internet of More Lies.

False information > wooden sabots

Reminds me of the early days of the internet when the jesters and paranoid used to put email signatures loaded up with buzz words to trigger the NSA…

The tech bros such as Sam Altman, Musk, and Gates aren’t building/funding AI systems out of the goodness of their hearts. They truly want to replace employees and little people. You are disposable.

The same people were all climate change and green energy until they realized that solar and wind aren’t reliable enough to power Terawatts of data centers with millions of GPUs. Now they want nuclear power plants, not because it makes sense for society as a whole, but because they need to power their info-weapons and money machines.

LikeLike

Wouldn’t the tungsten penetrator have issues with the wood’s moisture absorption characteristics before firing? And then when fired, would not the charring foul the tank’s barrel faster? I mean, conceptually a discarding sabot made of wood would be…

Wait, you mean the shoes.

Nevermind.

LikeLike

Wooden sabot for cannon was done at least as far back as the 18th century.

(grin)

LikeLiked by 1 person

To be honest…I view AI as a new tool. Just like people swore up and down that the microchip was gonna put millions upon millions of people out of work…and didn’t. Just as automotives were “oh, but think of the poor buggy-makers!” but the buggy-makers found new jobs. THAT part, I’m not so worried about. Humans will always find a new way to make money and keep themselves occupied. :)

LikeLiked by 1 person

You seem to think this is a bad thing.

LikeLike

Well…I will agree with him that when it comes to Gates it absolutely IS a bad thing. That man is evil, and wants to be a Bond villain so bad you can taste it.

LikeLiked by 1 person

SPECTRE has higher standards than that.

LikeLiked by 1 person

LOL!!

You’re right, though, they do. Pretty sure they’d point Bond or another 00 in his direction to get rid of him, before he gives them all a bad name.

LikeLiked by 1 person

They sort of prefer hands-on….

LikeLike

Usually, yeah, but I’m sure they wouldn’t want to lower themselves to dealing with a bug like Gates :D

LikeLike

Now they’re finding AI hallucinations in lawsuits and amicus briefs, making up case law from whole cloth. It tosses in cases that never were with rulings that didn’t happen, and wouldn’t ya know, it is almost always the leftoids using it like that for things like Second Amendment stuff, and citizens’ rights.

LikeLike

And smart law firms will start using that as a way to weed out the bad lawyers. I expect the problem of humans using AI to be lazy will right itself in time, because it will raise the bar on people who ACTUALLY do their jobs and use their brains in all kinds of industries (alas, it will never fully eliminate the do-nothing slackers who keep their jobs because they know somebody, but…). As for the ones that won’t use their brains and work…well, they weren’t likely to anyway, so best they get a job doing something that will cause less harm. My guess is that, in the long run, the presence of AI will actually lead people who want to succeed to work harder and smarter, and that will be to an overall good.

The in-between period before we hit that point is gonna suck bigly, though.

LikeLiked by 2 people

The issue is that, on a law firm’s compressed work schedule dictated by court calendars, they now have to independently recheck every cite to make sure it’s not imaginary.

If there were some structure for completely separate LLM AI to independently check each others work, running in parallel, that might be useful as we navigate these interesting times. Maybe a three-LLM voting structure?
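A minimal sketch of what that three-way vote might look like, assuming each LLM can be wrapped as a function that takes a citation and answers yes or no. The `Checker` interface, the lookup-based stand-ins, and the "Case A/B/C" names are all invented for illustration; nothing here calls a real model.

```python
from typing import Callable, Iterable, List, Set

# Hypothetical interface: one independent checker (standing in for one LLM)
# takes a citation and returns True if it believes the citation is real.
Checker = Callable[[str], bool]


def majority_accepts(citation: str, checkers: List[Checker]) -> bool:
    """Return True if a strict majority of checkers accept the citation."""
    votes = sum(1 for check in checkers if check(citation))
    return votes > len(checkers) / 2


def needs_human_review(citations: Iterable[str], checkers: List[Checker]) -> List[str]:
    """Return every citation that at least one checker rejected."""
    return [c for c in citations if not all(check(c) for check in checkers)]


def make_lookup_checker(known: Set[str]) -> Checker:
    """Build a stand-in checker that only 'knows' a fixed set of citations."""
    return lambda citation: citation in known


# Three stand-in checkers with deliberately different (made-up) knowledge.
checkers = [
    make_lookup_checker({"Case A", "Case B"}),
    make_lookup_checker({"Case A", "Case B", "Case C"}),
    make_lookup_checker({"Case A"}),
]

if __name__ == "__main__":
    for cite in ["Case A", "Case B", "Case C"]:
        print(cite, "accepted by vote:", majority_accepts(cite, checkers))
    print("send to a human:", needs_human_review(["Case A", "Case B", "Case C"], checkers))
```

A vote like this only helps to the extent that the checkers make different mistakes; three checkers that share the same blind spot will outvote the truth together.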

LikeLike

FM, the problem there is that AI isn’t just its algorithm, it’s all the data used to train it…. and they’re all using a pretty overlapping set.

We know that ever since East Anglia in 1997, the climate data has been folded, spindled, and outright destroyed, and this can be applied to almost every field.

https://hotair.com/headlines/2025/08/05/the-daily-beast-fabricated-scientific-findings-to-pathologize-homophobia-n3805509

Well, that 10 year old pile of aged crap is what’s going into the data pool, multiplied by a million or so, but it’s also what’s being regurgitated when someone “asks Grok” about homophobia.

But because “Grok says”, it’s somehow factual. Pfui.

LikeLiked by 1 person

There’s some work on using LLMs to detect hallucinations. Not sure how much of it has made it out into the wild yet. My impression is that you can mitigate certain types of errors with some effort but not eliminate them completely.

Law is an interesting case because (1) there’s a lot of money involved and (2) the hallucinated citations should be easy to pick out. So double-checking citations should be doable. But IIRC, someone said there are already tools for that, and the people submitting the hallucinated citations didn’t even bother to run them, so…yeah.

(There’s also the issue of mopping up the easy hallucinations and leaving things like hallucinated legal principles or misinterpreted cases. Messy all the way around.)

LikeLiked by 1 person

Heh. As I said–I predict it will become a way for law firms to weed out people who aren’t bothering to do their jobs properly. If they can’t be bothered to check it, they best not be practicing law!! :D

LikeLike

Sarah, we’re seeing it now: managers who see AI as a great way to reduce head count without bothering to take into account that there’s time that has to be spent verifying what goes into and comes out of the box.

LikeLike

That’s actually not at all what I was saying (and that’s a bunch of bad managers.) I’m saying that folks opting to use AI instead of doing their jobs–like a legal assistant or similar in a law firm relying on AI and failing to check its information for accuracy–will be weeded out this way. At least, where good management is involved–so not likely to be done like this so much as the way you described, alas. Good managers seem to be quite thin on the ground everywhere!

LikeLiked by 1 person

And then they will complain that their other workers can’t get things done as quickly.

LikeLike

Yep. Argh, I loathe 85% of all managers, lol.

LikeLiked by 1 person

Given the current culture and recent attempts to rewrite the past, it is not inconceivable that a sufficiently committed legal authority would rewrite the body of case law to include the “newly added” content. Because reasons.

LikeLike

I expect we’ll see something like that in the next 10-15 years. Right now, AI is still so new it’s the Wild West out there and all kinds of crazy crap is happening while people figure out how to adjust!

I find it damn useful for some things, to be sure. I did an assessment test for a transcription firm, and using AI to clean up the audio is AMAZING (specifically the background noise). Trying to do something like that six or seven years ago would have taken me hours and been a massive headache.

Mind you, its ability to transcribe *sucks*. But this is why AI isn’t going to be replacing transcriptionists or stenographers anytime soon: it just cannot match the human ear when it comes to accents, quirks, and dealing with bad audio!

LikeLiked by 1 person

Law firms can’t really afford to use it to “weed out” lazy lawyers. Judges issue fines over this nonsense whenever they catch it. And unless you’ve got an open and shut case (in which case the other side should have settled before trial), your lawyer just crippled your client’s case by turning the single most important person in the court room against you. Even if the lawyer who did it is immediately yanked from the case, the judge will be biased against that side’s counsel for the rest of the case. And lawyers from the same firm might have trouble in that judge’s courtroom in future cases.

Frankly, it’s very dangerous malpractice.

LikeLike

To copy what I said on twitter the other day:

It’s bomberhitdiagram.jpg all the way down.

LikeLike

It’s not just the suits and briefs (sounds like I’m talking about menswear…). I’ve heard of a judge that used fake cites generated by an AI in something that he issued from the bench.

LikeLiked by 2 people

Pretty sure I linked to that very case as Exhibit A.

LikeLike

After reading through it, I don’t think it’s the exact case I read about, which means there’s at least a third one out there (assuming my source was accurate)…

The wording on that link is misleading, though (not your fault). It suggests the plaintiff’s lawyers were the ones at fault, and the judges dismissed the case, while in actuality both judges have said that they will reissue their rulings.

LikeLike

Something like that ought to be automatic disbarment and removal from the bench in the case of judges. Sadly, we already had a glut of stupid judges and malpracticing lawyers, so I’m not gonna hold my breath :/

LikeLike

Here’s the thing, though –

To the best of my knowledge, legal knowledge is not a requirement for *any* judgeship. Yes, we expect our judges to be familiar with the law. Congress holds hearings to determine the legal qualifications of potential federal judges, and state legislatures presumably do the same for judges that the governors appoint. But there’s no actual legal requirement. The only thing preventing an imbecilic high school drop-out from serving as a judge is that one hasn’t been appointed or elected yet.

At least to the best of my knowledge.

And note that I also included “high school drop-out”, and not just imbecilic, for those of you inclined to list counter-examples of judges whose rulings you dislike. :P

A state bar association could disbar a judge, assuming the judge had a license to practice law (which they generally do). But aside from the potential embarrassment, and the fact that higher courts would likely add a little more scrutiny to any of his or her cases that came before them, it would mean absolutely nothing for a judge.

LikeLike

insert face/palm here

LikeLike

That is very easy to answer: because even with the errors it is still useful. I ask humans things all the time as well. And an average human is far, *far* worse about making up things to fill in gaps in the garbage they’ve been trained on.

Also the error rate dropped considerably once models got the ability to search the internet instead of extrapolating purely from training data.

For a practical example of something I recently used DeepResearch on: https://chatgpt.com/share/687c8af1-2328-8007-9b2f-b6a994d65d32

This is something which would have taken me hours to days of on and off searching. Or I can describe what I’m looking for, and come back 10 minutes later to accurate and inherently checkable results.

LikeLike

Yes. AI can and has saved me a lot of time just getting pointed in the right direction for something I want to know. I’ll still dig deeper and check, but it is a HUGE time saver!

LikeLike

And for those not using Chat GPT (or Grok, etc.) directly, Brave Search has a similar function that pops up at the top of (most of) your searches… the “AI” summary is done by something in-house (that they describe in their explanations pages), not Grok or Chat-GPT etc.; and you can basically read it or not. As free and non-subscriptionary as Brave Search (search.brave.com) is.

I’ve found it frequently credulous and occasionally bizarre, but often a useful first step.

LikeLike

Leo is the AI model that Brave made/uses. I don’t usually use its search answers, but most of the times when I have, it’s been a big timesaver.

For things like casual-user level computer troubleshooting, it’s great; gives me a sense of what the solutions are likely to be, and I can use its reference links to home in on the most likely sources of detailed and accurate info instead of sorting through a half-dozen forums and sites with only tangential or incomplete info.

It was super helpful in researching candidates for local primary elections last week. For the obscure candidates, who hadn’t filled out the candidate questionnaires in the usual locations, it did me the signal service of summarizing a sort of mini-bio; I followed up on the source links and found out that a couple of school board candidates who seemed like anodyne choices on the surface were into wokery and activism, the AI having found info I never would’ve searched deeply enough to find otherwise. It also brought me to a couple-three websites that I used to find info on several other candidates who had filled out those questionnaires and actually talked to people about policy.

Unfortunately, after leveraging AI search results to make some of the most fully informed choices in my voting history, I found myself staring at the filled-out ballot…on my desk…three hours after it should’ve been in the drop box in city hall. D’oh!

LikeLike

reads this far too late at night and thinks “The pope is AI? what is this a Clifford Simak novel?”

LikeLiked by 1 person

Given the chronology, if anything, the pope is named after the AI. Make of that what you will.

LikeLike

More popes named Leo preceded the AI than followed it.

LikeLike

Shh. You’ll ruin a perfectly good conspiracy theory.

LikeLike

If they can’t put in the effort, it’s not a good conspiracy theory.

LikeLike

Look at it this way: If you need sources, the LLM will make up as many as you want!

LikeLike

I spent a very entertaining few hours with grok last week.

Read a RP story ‘The Bacon Butler’, and wondered how to make as close to a ‘perfect’ wish as I could get.

Started with ‘good health, repairing all disease and age-related deterioration’.

Went through 20-something iterations, got the wish up over 1000 words. Built in a version of Asimov’s Three Laws of Robotics.

If ever I DM another campaign, my ‘wish loophole-finder’ is nicely honed.

But it was for fun only. Wouldn’t make any serious decisions based on an LLM.

LikeLike

The other day I saw yet another AI generated “photo” with a totally unnatural looking hand, and that reminded me of an article I read somewhere (WSJ?) a few months ago. It described researchers who had figured out a way to extract, to some extent, the structure of the model connections inside a large learning type AI program. You could think of it as “making a map of the brain’s neurons”.

The particular scenario was an AI that had been trained to navigate around Manhattan. One might guess that a program that could do this well would in effect have created a map of Manhattan, from which it could then derive routes as needed. It turned out that it hadn’t done anything even remotely similar. Instead, the internal structure of the model amounted to a vast collection of individual cases for getting from some A to some other B, with no general model whatsoever.

I’m thinking that AI generated pictures suffer from something similar. Artists use their knowledge of anatomy to get it right. 3D CAD tools support “rigging” which is essentially the same thing: you first define a skeleton with rules how the joints move, and then you “wrap” it in “skin”. So your CGI human moves correctly because the model contains a description of the human skeleton.

Learning AI systems wouldn’t work that way, because feeding them a million pictures of humans does not define a model of the skeleton. So I would expect that such an AI has an “understanding” of human shapes that is merely a collection of color blobs, without any rules relating them. This is why you get six fingered hands, or body parts that are leaking and deforming in ways that would make Salvador Dali nauseous.

LikeLike

There is supposedly a product out there that adds a finger to your hand.

So that you can then disclaim any photo as an obvious AI fake. LOL

LikeLiked by 1 person

I’m not familiar with that particular work (sounds neat), but Anthropic did something in the same vein: https://transformer-circuits.pub/2025/attribution-graphs/biology.html

The high-level version is that they trained a simpler model to replicate an LLM’s outputs, then identified clusters of neurons tied to certain words/concepts and looked at what “circuits” of behavior the model learned, to get a feel for why LLMs behave the way they do.

One of the findings, in line with what you report about not actually learning models of places/anatomy, is that LLMs do arithmetic by approximation. There’s one set of circuits that adds up the last digit, another one that guesstimates an answer, and a third set that combines the two, basically fudging the guesstimate to have the last digit it derived separately.
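To make that description concrete, here is a toy sketch in Python of "exact last digit plus rough guess, then fudge the guess". It is not the circuit from the linked write-up, just an illustration of the idea as summarized above; the function names and the particular way of guessing (rounding one operand to the nearest ten) are assumptions made up for the example, and it only handles non-negative integers.

```python
def last_digit_path(a: int, b: int) -> int:
    """Work out only the final digit of a + b, exactly."""
    return (a % 10 + b % 10) % 10


def rough_estimate_path(a: int, b: int) -> int:
    """Guess the sum by rounding one operand to the nearest ten (assumed heuristic)."""
    return a + round(b, -1)


def combine(estimate: int, digit: int) -> int:
    """Nudge the estimate to the closest number that ends in the given digit."""
    base = estimate - (estimate % 10) + digit
    candidates = (base - 10, base, base + 10)
    return min(candidates, key=lambda c: abs(c - estimate))


def approx_add(a: int, b: int) -> int:
    """Add two non-negative integers the 'fudged guesstimate' way."""
    return combine(rough_estimate_path(a, b), last_digit_path(a, b))


if __name__ == "__main__":
    print(approx_add(36, 59))  # estimate is 96, last digit is 5, so the result is 95
```

The toy usually lands on the right answer, but when the guess is off by exactly five the two nearest candidates tie and it can pick the wrong decade, which is the flavor of error you would expect from a process that never actually carries.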

LikeLike

Our work phones have voice mail that (usually) attempts to do an AI transcription of the spoken word. It’s sometimes unintentionally hilarious. And points up that voice-to-text technology is still very much a work in progress.

LikeLiked by 1 person

I’ve been “hard of hearing” since childhood, but until recently that meant I had to actually *listen* to what people were saying. Unfortunately most people slur their words badly, leave words out, put the accents on the wrong syllables, use wrong words, and randomly substitute vowel sounds.

Then there are the ones who just emit a “hnnnhnnnknnnn” modulated whine as ‘speech.’

Given that, I’m amazed that any ‘AI’ translations or speech recognition work at all.

LikeLike

Gee. Evil slanderers … slander evilly. Hoodah thunket? Klukkers gonna klukk.

Asking an LLM is like asking your Stoner Great Uncle, the one that thought the 60s didn’t have enough drugs and who lives in the NP ward when not in a cardboard box.

LikeLiked by 2 people

“…how these systems are vulnerable to malicious poisoning of their data.”

And how they’re already poisoned by the Southern California liberals who built them.

It’s the Woke(TM) worldview expressed in software. Also, it’s crap.

Try googling this phrase: “Long it has been since last I beheld your fair countenance.”

This is something I’ve heard said as a joke by family since I was a kid. I wanted to use it as a joke in one of my books, so I tried to find out if it is a quote.

Regular Google and regular DuckDuckGo don’t find it quoted anywhere. Seems some wise ass relative of mine made it up.

Google AI says it’s a Shakespeare quote from Twelfth Night. DuckDuckGo AI says it is -not- a quote from Shakespeare, but sounds like it ought to be. I do not remember that line being in Twelfth Night myself, but it has been 40 years.

Googling to see if it is from Twelfth Night, now Google AI says that it is not, but -could- be from Romeo and Juliet. Maybe. But it won’t give me the line or what character says it.

This is anti-useful. And I’ve burned ten minutes and half a megawatt of electricity somewhere.

For my purposes the regular search is good enough. Even if it is a quote it is an obscure one, and the reader will not throw my book down saying “that’s from Shakespeare, you illiterate.” Chances are it is something my Ancient Great Uncles made up on the spur of the moment for a laugh. (They did things like that. Bunch of weirdos. I guess I come by it honestly.)

But using AI for a serious purpose is fraught with the same peril that using Wikipedia is.

Try looking up Sad Puppies, for example. The Wiki article begins: “Sad Puppies was an unsuccessful right-wing anti-diversity voting campaign run from 2013 to 2017…” and then runs steeply down hill from there. Because the Wiki article is freeped 24/7 by a dedicated group of fruitbats who camp on it, preventing anyone from changing it to be more accurate.

Surprisingly, the DuckDuckGo AI is more even-handed in its extended summary:

“Sad Puppies was a campaign aimed at influencing the Hugo Awards, which honor achievements in science fiction and fantasy. Founded in 2013 by author Larry Correia, the campaign sought to promote works that its supporters felt were overlooked due to political correctness.”

But if you don’t go for the extended summary, you get:

“Sad Puppies was a right-wing campaign that aimed to influence the Hugo Awards, which honor achievements in science fiction and fantasy, by promoting works they felt were overlooked due to political correctness. The campaign ran from 2013 to 2017 but ultimately failed to achieve its goals, leading to changes in the voting rules to limit bloc voting.” Because it only quotes Wikipedia and The Guardian for the short answer.

So, who decided it should look at Wiki and the Guardian first, for the short answer? When we all know that they’re both utterly bent? Some punk in Southern California, that’s who. And he giggled when he did it.

So I tried “gun control” on DuckDuckGo AI, and guess what? It searches Wikipedia first. Yep. It’s bent.

I would say that this is the most serious and most expensive attempt at Leftist mind control and social engineering in history. That’s why I don’t use it. I’m bombarded with Leftist propaganda all day long already, I don’t need more of it.

And this is only the domestic malign influence. None of this even touches on foreign malign influence that we -know- is being applied all over the place. Who do we think pays for the fruitbats camping on Wikipedia articles about obscure science fiction things? I strongly doubt they do it for free, it is too much work.

LikeLiked by 2 people

Lenin called them “Useful idiots”.

Drug dealers call them “Strawberries”.

LikeLiked by 1 person

Yes.

Now consider the non-malign (it doesn’t know how to be either malign or benign) echo chamber effect of the up-and-coming “AI”-generated “blogs” and “news stories” plus “scripts” to be read by actual, real-live-human podcasters, videomakers, etc. (This is actually a thing already, apparently, as in “supercharge your productivity with AI tools!” or similar.)

“AI”-curated Web nonsense reappears as “new” content, which then gets (immediately, or not) vacuumed up in the next iteration of “what’s on the Web is truth” training data or almost-live input to the next iteration of “the world according to AI.” And the beat of positive feedback goes on.

It’s possible one could comb through all the carnivals of history and never find a real-physical funhouse mirror as madly distorting of the reality confronting it as… um, that.

LikeLiked by 2 people

Remember that the goal of general AI is ultimately for the progs to create a god. Ironically, they think that if they make a god who is a computer, they can therefore remove all the problems of humans being gods. Even though humans made it.

This is why the LLMs (which they love to believe are the precursor to general AI) are “trained” on such malarkey. “If we train it on Marxism, it will be a Marxist god!”

But they call us “odd.”

LikeLike

c4c

LikeLike

Mostly i am bummed to hear that Whisper was not smart enough to cope with Sarah’s accent.

LikeLiked by 1 person

Taqiyah is a fundamental command in Islam.

Which is why no Muslim can ever be trusted to ever tell the truth to a non-Muslim, and even Muslims of differing sects can’t trust each other whom they believe to be heretics.

It’s also one reason why Islam is anti-thetical to the U.S. Constitution.

LikeLiked by 1 person

This is a comment for comments, because I cannot add any comments to the discussion. This article and the concerns raised are far too close to some conversations at Day Job™, and my opining might not be wise.

LikeLiked by 1 person

I say:

“I can neither confirm nor deny the existence of “en****tification on steroids agents” at my current place of employment.”

I kid…

Some of the limited, better trained tools seem to be extremely useful, if you already know the problem domain and processes. Sort of a better version of some of the expert systems tools from the ’80s and ’90s. Good for the experienced, magical and dangerous for the newbs.

LikeLike

We need an AI named Vlad the Explainer who can impale all those hate sites.

Funny how the more time goes on, the more Vlad the Impaler was correct.

LikeLike

One of the more annoying things I’ve seen on Twitter is “Grok, is this true?” People need to do their own research. *sigh*

LikeLiked by 1 person

Annoying, yes; but think of it as a tool to counter Brandolini’s Asymmetry Principle: If refuting nonsense takes so much more time & effort than producing it, why not off-load some of that extra work to the machine?

LikeLike

Yeah, but the ones doing that are usually all “SILENCE! THE BOX HAS SPOKEN!” like that teacher from the Invader Zim cartoon from about 25 years ago. Can’t find a YouTube clip, alas.

LikeLiked by 1 person

Garbage In – Garbage Out

is bad enough. But

Garbage In – Gospel Out

is even worse.

LikeLike

They used to say that with a different box. Nothing has changed.

LikeLike

Hating Jews for any reason, real or imaginary, puts one in the category of hating the LORD (Jehovah, YHWH, or however one spells it). Read 1 John 4:7-8, 20 for the TL;DR version.

Without hopefully waxing too “Southern Baptist” on you, Gentle Readers, the Bible stated it was my fault Jesus died on the cross. He did it willingly for those God chose, and on the 3rd day He rose from the dead.

Please don’t fall for the agitprop. Not all Jews are fun people; but neither are all SBC, Muslims, Catholics, Atheists, Shoe Salesmen, or Presidents.

Please don’t hate anyone, especially the jerks. Feel sorry for them and pray they wake up and avoid everlasting torment in hell.

LikeLiked by 1 person

Man, if you can’t trust anti-Semites on the Internet, who can you trust? /s

LikeLiked by 3 people

Forget Law LLM implications: What about Science?

Here’s a quote from a piece on academic fraud over on Hot Air:

This fraudulent academic publication flood is the training text that the LLMs which are being counted upon to yield medical cures and scientific breakthroughs and other AI miracles.

If that does not make one worried I don’t know what will.

LikeLike

FM, the other worrisome thing is that all of those bad studies are based on their own bad data, and too often, even when you identify that the data is bad, the original readings can’t be re-taken. “Climate change” is a prime example.

LikeLike

And to an LLM AI, if it’s published it’s valid.

LikeLike

Even if it’s narrowly tailored to a certain field, it’s vulnerable.

LikeLike

As a peer reviewer for the National Academies, I regret to state that several areas of specialty are already so captured by activists that AI is the least of the problems. The far bigger problem is ‘studies’ using crap data or designed from the start to be only able to lead to one conclusion. And commenting critically on such studies results in being taken out of the review pool for future studies.

LikeLike

Peer review is an important tool for the sciences. Unfortunately, it’s also subject to “group-think.”

LikeLike

And as the Mark Steyn trial proved, Michael Mann got his “good” results that confirmed the hockey stick by carefully curating who he considered his peers; all others were denied access to his methods and data. I don’t expect AI to improve that.

LikeLike

I am strongly inclined to believe that if the public pays for a study, or if a private individual wants his study to be used to influence public policy, then the data, the methods, and the source code all need to be made open to the public.

Otherwise, it should be considered at best manipulated, at worst, made up.

LikeLike

This is hardly the only example of such abuse. As Alpheus points out, data should be published. That allows others to confirm the results. But unfortunately, it also allows unscrupulous others to tweak the data to “show” entirely different results.

A nice example is the work of Prof. John Lott, which he described in the book “More guns, less crime”. The raw data for his work is a nationwide survey, which he made available. According to an appendix in the second edition of that work, some “scientists” at a major university took that data, selectively cherry-picked one-seventh of it (excluding a bunch of states in their entirety, for example) and “proved” the opposite of Lott’s conclusion that way. Of course, the nice thing is that having to delete 85% of the data to arrive at a different answer means the evidence for the original answer is conclusive indeed.

LikeLike

There’s a simple rule: if the name of a discipline contains the string “science” or worse still “studies” it is not science. Best case it’s harmless fluff; more often it’s a dangerous cult.

LikeLiked by 1 person

I’d like to say “computer science” should be the exception to the rule, but that’s really only true for the pure stuff. “Practical” software development and engineering is largely a kludge of half-to-no-thought-out ideas strung and duct-taped and bubble-gummed together by popular-culture-minded developers to fix random problems that inevitably crop up from this approach.

I am convinced that everything we needed to know about good computer programming was figured out by the early 1970s, and possibly even by the late 1950s, but due to a weird combination of every generation starting out with very limited resources (at least until the microcomputer revolution really took off), ignorant-of-computer-science-literature amateurs jumping in with hacks to get things done (which is reset every generation that computers advanced, up until micro-computers made computing accessible to everyone, making this the final such reset), and network effects preventing the possibility of adopting “the right way” because if you do that, you can’t work with pretty much anything else, “computer science” isn’t as much of a “science” as people would like to think it is!

LikeLike

Alpheus, the most useful book on software development I’ve ever read is Brooks’ The Mythical Man-Month from 1975.

LikeLike

I read the book while I was in college (about 25 years ago?!?), and I have to agree!

Several years ago, I saw a comment that the book is outdated, but I had to scratch my head and ask “really, which part?” (I don’t recall if I actually asked the question, but it has lingered since then.)

I have often wondered if he had something like Agile Programming in mind — but in my experience, Agile is an interesting idea for managing and producing software, but I don’t think it cancels out or improves on what was explained in that book!

Alpheus, in my experience, a lot more projects say they use Agile / Scrum than actually do so effectively or more than pro forma.

“If builders built buildings the way programmers wrote programs, then the first woodpecker that came along would destroy civilization.” – Gerald Weinberg

If builders were given requirements the way programmers were, they would build buildings like the programmers program.

I ONCE had a program for which I was given entirely correct requirements, and I ONCE had a person apologize for giving me bad requirements.

The Reader thinks that this will just break the cargo cult misnamed ‘science’ faster. He isn’t sure what we will replace it with though.

Hopefully not human sacrifices to the sun god…

Again; what has changed?

We now have an additional, supposedly objective because computer, layer of crap between us and the facts.

As I said: what has changed?

I keep waiting for people to recognize that Warmism is an example of such a fraud, from start to finish. And a particularly nasty one because it is used by its perpetrators (and its enablers in various totalitarian countries) as a scheme to eliminate liberty.

Long before AI, an engineer at work came up with a “report generator”. I coded it in BASIC to actually generate “reports”. Basically, it was a list of sentence beginnings, middles, and endings that would make sense if randomly put together and contained all the popular buzzwords. (I added code so that none were repeated in adjacent sentences.) Less astute colleagues would read about half a page before they realized that the report wasn’t actually saying anything.
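
For the curious, here is a rough Python sketch of how a generator like that might work. It is not the original BASIC program; the phrase lists and the names `generate_parts` and `generate_report` are made up for illustration. The only rules taken from the description are that each sentence is a random beginning, middle, and ending, and that no piece is reused in the very next sentence.

```python
import random

# Hypothetical phrase lists; the original program's wording is not known.
BEGINNINGS = [
    "Going forward, the team",
    "In light of current priorities, management",
    "Per the latest review, the project",
    "At this point in time, the organization",
]
MIDDLES = [
    "will leverage synergies to",
    "must proactively realign resources to",
    "intends to holistically",
    "is positioned to strategically",
]
ENDINGS = [
    "optimize stakeholder value",
    "streamline the core deliverables",
    "maximize cross-functional impact",
    "facilitate a paradigm shift",
]


def pick_different(options, previous, rng):
    """Pick a random option that differs from the one used last time."""
    choices = [option for option in options if option != previous]
    return rng.choice(choices)


def generate_parts(n_sentences, seed=None):
    """Return a list of (beginning, middle, ending) tuples.

    No piece is repeated in the same slot of two adjacent sentences.
    """
    rng = random.Random(seed)
    previous = (None, None, None)
    result = []
    for _ in range(n_sentences):
        current = tuple(
            pick_different(options, prev, rng)
            for options, prev in zip((BEGINNINGS, MIDDLES, ENDINGS), previous)
        )
        result.append(current)
        previous = current
    return result


def generate_report(n_sentences, seed=None):
    """Join the generated pieces into one paragraph of filler text."""
    return " ".join(
        " ".join(parts) + "." for parts in generate_parts(n_sentences, seed)
    )


if __name__ == "__main__":
    print(generate_report(5))
```

Each list needs at least two entries, otherwise there is nothing left to pick once the previous piece is excluded.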

Arcata’s fog rolled in thick as secrets. FBI Agent Kane leaned on the garage doorway, wiping grease from his hands while watching Razor Calhoun’s gang roar past. The mine’s glow still haunted him—uranium crates, Soviet lies, and one shot that changed everything.

“Of course,” he muttered, “it never happened… right?”

“That’s my story and I’m sticking to it.”

Sometimes I amuse myself by asking Grok to translate short English phrases into Latin. I am not by any measure an expert on Latin but I do believe I know enough to not be easily fooled. Recently I asked Grok to translate, “He is still a man.”

Grok’s first offer was: Ego felum est

What the heck? Is Grok telling me I am a cat? Additionally, the word “felum” looked suspicious, so I double-checked and could not find it in any Latin dictionary I have access to. I then challenged the translation and offered my own: Adhuc masculus est. To its credit, Grok accepted that and even explained to me its “reasoning” as to why my translation was better.

That was not the first time it fell down a strange rabbit hole and it probably will not be the last. Nevertheless, it has been very helpful to me at a number of real-world tasks, and its data sieving is much faster than I can do on my own. I think (hope?) that, as long as I remember it does not have any principles that warn it of intellectual hazards, it can be a useful tool.

Stercus intrat; stercus exit.

Not exeunt?

Consistency. “Exeunt” is third person plural whereas “intrat” is third person singular. Stercus — dung or garbage in this context — is singular so intrat and exit seemed more appropriate to me.

Okay. You have a point.

I got to get back to Latin and Greek, in my COPIOUS spare time.

Not “adhuc homo est”?

Homo can mean “man” or “human” but it didn’t fit the context. I originally used the phrase to describe a dingbat “trans-woman” of Bud Light fame. I chose masculus because one meaning is “man” but it also means “male” so I believed it left no doubt that I was calling him a man pretending to be a woman.

Oh!
